OK… I’ll say it. The whole idea of an Architecture Review Board may be wrong-headed. That officially puts me at odds with industry standards like CobiT, ongoing practices in IT architecture, and a litany of senior leaders that I respect and admire. So, why say it? I have my reasons, which I will share here.

CobiT recommends an ARB? Really?

The CobiT governance framework recommends that an IT team create an IT Architecture Board (PO3.5). In addition, CobiT suggests that an IT division create an IT Strategy Committee at the board level (PO4.2) and an IT Steering Committee (PO4.3). So what, you ask?

The first thing to note about these recommendations is that CobiT doesn’t normally answer the question “How.” CobiT is a measurement and controls framework. It sets a framework for defining and measuring performance. Most of the advice is focused on “what” to look for, and not “how” to do it. (There are a couple of other directive suggestions as well, but I’m focusing on these.)

Yet here CobiT gets specific about “how”: it recommends that a governance model for IT include three boards, and it names exactly these three.

But what is wrong with an ARB?

I have been a supporter of ARBs for years. I led the charge to set up the IT ARB in MSIT and successfully got it up and running. I’m involved in helping to set up a governance framework right now as we reorganize our IT division. So why would I suggest that the ARB should be killed?

Because it is an Architecture board. Architecture is not special. Architecture is ONE of the many constraints that a project has to be aligned with. Projects and Services have to deliver their value in a timely, secure, compliant, and cost-effective manner. Architecture has a voice in making that promise real. But if we put architecture into an architecture board, and separate it from the “IT Steering Committee” which prioritizes the investments across IT, sets scope, approves budgets, and oversees delivery, then we are setting architecture up for failure.

Power follows the golden rule: the guy with the gold makes the rules. If the IT Steering committee (to use the CobiT term) has the purse strings, then architecture, by definition, has no power. If the ARB says “change your scope to address this architectural requirement,” it has to add the phrase “pretty please” at the end of the request.

So what should we do instead of an ARB?

The replacement: The IT Governance Board

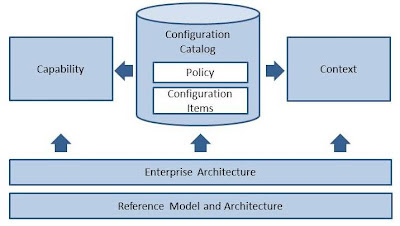

I’m suggesting a different kind of model, based on the idea of an IT Governance Board. The IT Governance Board is chaired by the CIO, like the IT Steering committee, but is a balanced board containing one person who represents each of the core areas of governance: Strategic Alignment, Value Delivery, Resource Management, Risk Management, and Performance Measurement. Under the IT Governance Board are two, or three, or four, “working committees” that review program concerns from any of a number of perspectives. Those perspectives are aligned to IT Goals, so the number of working committees will vary from one organization to the next.

The key here is that escalation to the “IT Governance Board” means a simultaneous review of the project by any number of working committees, but the decisions are ALL made at the IT Governance Board level. The ARB decides nothing. It recommends. (That’s normal.) But the decision-making IT Steering committee goes away as well, to be replaced by an IT Steering committee that also decides nothing. It recommends. Both of these former boards become working committees. You can also have a Security and Risk committee, and even a Customer Experience committee. You can have as many as you need, because Escalation to One is Escalation to All.
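To make “Escalation to One is Escalation to All” concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the class names, the `"support"` / `"object"` vocabulary, the project fields, and especially the decision rule (approve only if no committee objects). A real board would weigh the recommendations however it sees fit; the only point of the sketch is that every working committee reviews and only the board decides.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class WorkingCommittee:
    """A former board (ARB, Steering, Security...) that only recommends."""
    name: str
    review: Callable[[dict], str]  # returns "support" or "object" (hypothetical vocabulary)


@dataclass
class ITGovernanceBoard:
    """The single place where decisions are made."""
    committees: List[WorkingCommittee] = field(default_factory=list)

    def escalate(self, project: dict) -> dict:
        # Escalation to one is escalation to all: every committee reviews.
        recommendations: Dict[str, str] = {
            c.name: c.review(project) for c in self.committees
        }
        # Hypothetical decision rule, not from CobiT or the model itself:
        # approve only when no committee objects, otherwise send back for rework.
        if all(r == "support" for r in recommendations.values()):
            decision = "approve"
        else:
            decision = "rework"
        return {"recommendations": recommendations, "decision": decision}


# Two working committees with made-up review criteria.
board = ITGovernanceBoard([
    WorkingCommittee(
        "Architecture",
        lambda p: "support" if p["reuses_shared_services"] else "object",
    ),
    WorkingCommittee(
        "Steering",
        lambda p: "support" if p["cost"] <= p["budget"] else "object",
    ),
])

result = board.escalate(
    {"reuses_shared_services": False, "cost": 90, "budget": 100}
)
```

In this run the project is within budget but draws an architectural objection. Neither committee settles the matter; both recommendations land in front of the same board, which makes the call.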

The IT Governance board is not the same as the CIO and his or her direct reports. IT functions can be organized into many different structures. Some are functional (a development leader, an operations leader, an engagement leader, a support leader, etc.). Others are business-relationship focused (with a leader supporting one area of the business and another leader supporting a different area of the business, etc.). In MSIT, it is process-focused (with each leader supporting a section of the value chain). Regardless, it would be a rare CIO who could afford to set up his or her leadership team to follow the exact structure needed to create a balanced governance model.

In fact, the CIO doesn’t have to actually sit on the IT Governance board. It is quite possible for this board to be a series of delegates, one for each of the core governance areas, that are trusted by the CIO and his or her leadership team.

Decisions by the IT Governance board can, of course, be escalated for review (and override) by a steering committee that is business-led. CobiT calls this the IT Strategy Committee and that board is chaired by the CEO with the CIO responsible. That effectively SKIPS the CIO’s own leadership team when making governance decisions.

And that is valuable because, honestly, business benefits from architecture. IT often doesn’t.

So let’s consider the idea that maybe, just maybe, the whole idea of an ARB is flawed. Architecture is a cross-cutting concern. It exists in all areas. But when the final decision is made, it should be made by a balanced board that cares about each of the areas that architecture impacts… not in a fight between the guys with the vision and the guys with the money. Money will win, every time.
