Link: http://weblog.tetradian.com/2017/07/08/evidence-based-architecture/

Flicking through the BMJ again this week, I noticed an editorial on ‘EBM manifesto for better health’ (cite/URL: BMJ 2017;357:j2973), where ‘EBM’ stands for ‘Evidence-Based Medicine’.

I’ve often noted that medicine and enterprise-architectures share several key characteristics, in particular an awkward mix of mass-sameness and mass-uniqueness. For humans, everyone is the same species, yet everyone is different, sometimes in ways that vary from simple(ish) (such as sex-type) to very complex or even unique (rare contexts, conditions, diseases, disabilities). Much the same applies to organisations: there’s a lot of sameness in there – such as imposed by law or industry-standards – yet also real uniqueness – if only as a means to confer ‘competitive advantage’.

In which case, what might enterprise-architects learn from the push towards evidence-based medicine?

The focus of concern in the article was about ‘bad science, bad decisions’ – and its catalogue of known-problems was pretty damning, to say the least:

Serious systematic bias, error, and waste of medical research are well documented. … Most published research is misleading to at least some degree, impairing the implementation and uptake of research findings into practice. Lack of uptake into practice is compounded by poorly managed commercial and vested interests; bias in the research agenda (often because of the failure to take account of the patient perspective in research questions and outcomes); poorly designed trials with a lack of transparency and independent scrutiny that fail to follow their protocol or stop early; ghost authorship; publication and reporting biases; and results that are overinterpreted or misused, contain uncorrected errors, or hide undetected fraud.

(The references for each of those assertions, and for those in the other quotes below, are in the article on the BMJ website – though unfortunately behind a paywall.)

The problems are not solely with the researchers themselves:

Poor evidence leads to poor clinical decisions. A host of organisations has sprung up to help clinicians interpret published evidence and offer advice on how they should act. These too are beset with problems such as production of untrustworthy guidelines, regulatory failings, and delays in the withdrawal of harmful drugs.

Taken together, these problems can and do lead to dire consequences for organisations, payers and, of course, for patients:

Collectively these failings contribute to escalating costs of treatment, medical excess (including related concepts of medicalisation, overdiagnosis and overtreatment), and avoidable harm.

Now read those quotes again as if for an enterprise-architecture context. For example, replace ‘patient’ with ‘client’, or ‘clinicians’ with ‘change-agents’, or ‘drugs’ with ‘frameworks and methods’. One equivalent of ‘medicalisation’ is the obsessive over-hyping of and over-reliance on IT-based ‘solutions’ – to which ‘overdiagnosis’ and ‘overtreatment’ would equally apply. As you’ll see, it all fits our field exactly.

Ouch.

So what should we do about it? Well, here’s the ‘EBM manifesto for better health’ that was included in the BMJ editorial:

- Expand the role of patients, health professionals, and policy makers in research

- Increase the systematic use of existing evidence

- Make research evidence relevant, replicable, and accessible to end users

- Reduce questionable research practices, bias and conflicts of interests

- Ensure drug and device regulation is robust, transparent and independent

- Produce better usable clinical guidelines

- Support innovation, quality improvement, and safety through the better use of real world data

- Educate professionals, policy makers and the public in evidence based healthcare to make an informed choice

- Encourage the next generation of leaders in evidence based medicine

Again, it’s easy enough to translate this into enterprise-architecture terms – and yes, it’s certainly something we need. Urgently.

Yet there’s a nasty little booby-trap hidden in that, which many people in business would still miss – and that’s the word ‘replicable’.

Replicable and repeatable is what we need in the sciences. It’s what we must have in any area where things stay the same.

But that’s the catch: in many areas in business, things don’t stay the same. (Some of those we want – for example, the subtle differences from others that provide us with commercial differentiation or commercial-advantage.) In those aspects of the business, there is no repeatability – or not much repeatability, anyway. And without that, we can’t have replicability either. That’s why applying someone else’s ‘best practices’ is usually a fair bit more fraught than the big-consultancies that sell them would often purport.

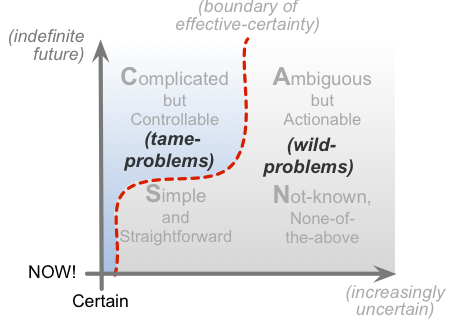

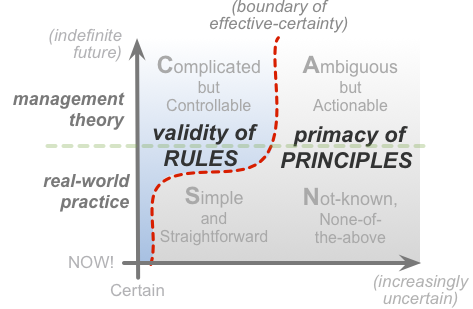

This is also where the distinction between ‘tame-problems’ and ‘wild-problems’ (‘wicked-problems’) comes into the picture: tame-problems are replicable, whilst wild-problems aren’t – or rather, the patterns for wild-problems can be somewhat replicable, but the content and ‘solutions’ for each are (usually) not. In SCAN terms, we could summarise it like this:

The role of evidence, and even evidence itself, becomes far more complex and problematic as soon as we cross over the boundary into wild-problem territory, the various domains that incorporate some form of inherent-uncertainty. Which means that the ways we work with evidence, re-use evidence, re-apply evidence, in those areas, become inherently more complex too:

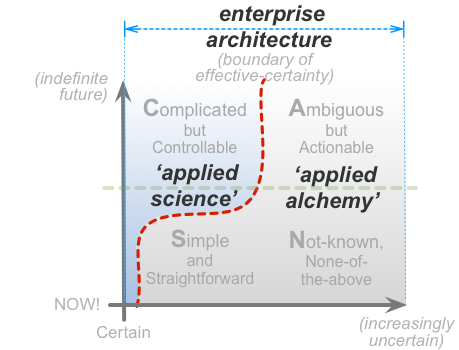

In fact, as we transition over that boundary, we necessarily shift from ‘applied science’ to something more akin to alchemy. The crucial point to understand, though, is that, overall, enterprise-architecture necessarily includes both of those – often both at the same time:

Which gets kinda tricky…

Which in turn means that for enterprise-architecture – and probably for medicine too – we’d need to add at least one more line to that ‘EBM Manifesto’ above:

- Make the replicabilities, inherent-uncertainties, applicabilities and applicability-constraints of research evidence relevant, replicable, and accessible to end users

Applying that amended manifesto to our own work should help to give us an evidence-base for enterprise-architecture and the like that we can actually use.

Over to you for comments, perhaps?