Link: http://weblog.tetradian.com/2015/01/19/theory-and-metatheory-in-entarch/

What’s the role of theory in enterprise-architecture? Could there be such a thing as ‘the theory of enterprise-architecture’? Can we use that theory, for example, to separate useful EA models from useless ones?

It seems that Nick Malik thinks so, as per his recent post ‘Moving Towards a Theory of Enterprise Architecture’. Within that article, he references a post of mine from a couple of years back, ‘The theory of enterprise-architecture’, which he interprets as follows:

[Tom Graves] posits that the theory of EA, if one were to be described, cannot be used to prove the value of an EA design. The not-so-subtle hint is that there is, therefore, no value in creating a theory at all.

Ouch.

Because an implied assertion that “theory has no value in EA” is not what I’d meant. At all.

But if he’s interpreted it that way, then I evidently haven’t communicated well enough what I actually meant.

Oops.

And given that many people in ‘the trade’ these days would typically view me as a theoretician or methodologist, then if I’m rejecting theory outright, as Nick implies, that would mean that I’d be denying even the existence of my own role. Which would be kinda tricky…

The quick summary, then, is that the reality is quite a lot different from what he’s implied – which also means I do need to explain it better! But first:

- read Nick’s post ‘Moving Towards a Theory of Enterprise Architecture’;

- then read my earlier post ‘The theory of enterprise-architecture’ as if it were a critique of Nick’s post (even though it’s from a couple of years earlier);

- then come back here.

(I’d say you’d be best to do it in that order, otherwise some of this might not make full sense – though with luck it should stand well enough on its own anyway.)

I’ll start by quoting from that earlier post of mine:

What is the theory underlying enterprise-architecture?

My own view is that an awful lot depends on what’s meant by ‘theory’, or ‘underlying’, or even the use there of the word ‘the’. And that’s before we even get close to The Dreaded Definitional Problem of defining what we mean by ‘enterprise-architecture’…

What worries me is that implicit in the question “What is the theory underlying EA?” is an assumption that in terms of how we use theory, we’re still operating solely within the linear-paradigm: for example, that there must somehow be an identifiable linear or ‘deterministic’ chain between [apparent] cause and [apparent] effect.

Reality is that that just ain’t how it works here.

Note that I’m not dismissing theory outright. Instead, what I’m saying there is that within contexts such as enterprise-architecture, we need to regard theory as ‘problematic’, in the formal methodological sense: theory itself as a methodological concern within which we need to be really careful about our assumptions and paradigms and the like.

So yes, actually, I do doubt that we could – or even should attempt to – derive a single ‘the theory of enterprise-architecture’, because experience shows that such theories are invariably based on undeclared assumptions and unexamined paradigms. Instead, what we need for enterprise-architecture is a much more solid understanding of metatheory – literally, ‘theory of theory’ – which will help us to test theory itself, and thence help us to know which type of theory to apply in any given context, and how, when and why to use that theory, and also how to link all of the different types of theory together into a unified whole.

The catch, of course, is that metatheory does tend to become seriously recursive, often running rapidly into full-on “My brain ‘urts…” territory. For example, classic linear-paradigm theory is all about purported ‘order’, whereas, to quote Douglas Hofstadter:

It turns out that an eerie type of chaos can lurk just behind a façade of order – and yet, deep inside the chaos lurks an even eerier type of order.

To put it bluntly, the short-answer to any “My brain ‘urts…” complaint is “Too bad.” – it’s what the discipline demands of us, so we’s gotta deal with it, ain’t we, whether we likes it or not? Nick’s comment that “Grown ups use proven science” is fair enough as far as it goes, yet in practice the science that we need will often look a lot more like Hofstadter’s “eerie type of chaos” than the linear-paradigm’s pretensions of ‘order’ – and even the concept of ‘proven’ often turns out to be a lot more problematic than it might seem from the cursory glance that is all it’s usually given by some supposed ‘grown up’. In short, again, it’s kinda tricky… but it’s a ‘tricky…’ that we must face properly if we’re to get anywhere with this.

To illustrate the point about just how tricky this gets, and how deep down the rabbit-hole we really need to go, let’s look at Nick’s assertion of “what I mean by a ‘theory of EA’”:

Typically, in science, we start with observations. Observations are objectively real. They should be mathematical and measurable. They exist within a natural setting and may vary from one setting to another. We may observe a relationship between those observations. The goal is to explain those observations in a manner that is good enough to predict outcomes. To do this, we suggest a hypothesis, which is simply a guess. We then see if we can prove or disprove the hypothesis using data. If we cannot disprove the hypothesis, we have passed our first test. We use the hypothesis to predict new observations. We then check to see if the predictions are correct. If so, we have a useful theory.

And now let’s gently (and, I hope, respectfully) take this apart, step by step, sentence by sentence:

- “Typically, in science, we start with observations.”

Yes, agreed.

- “Observations are objectively real.”

No. I can understand why people might say that, but the reality is that it risks being seriously misleading, because every observation is in some part subjective. And that’s true even with observations that are primarily by or through physical-instruments – the closest we can probably get to genuinely ‘objective’ – because the choice and placement and configuration of instruments will itself always be in part subjective.

Read Beveridge’s classic ‘The Art of Scientific Investigation’ – perhaps particularly, for this purpose, the chapter on ‘Observation’. Read Gerry Weinberg’s equally-classic ‘The Psychology of Computer Programming’. Read Paul Feyerabend’s ‘Against Method’. They all give exactly the same warning: the moment we allow ourselves to indulge in the pretence that our observations are ‘objective’, we’re likely to find ourselves in serious trouble, yet without the means to know how or why that’s so. In short, Not A Good Idea…

- “They should be mathematical and measurable.”

That’s an assertion that we could make here, yes. (Note the ‘an’ there: indefinite-article, not definite-article. That’s important.) It’s an assertion of a characteristic of observations that, yes, is highly-desirable for some purposes. Is it an unproblematic assertion? No. As with all risks around ‘solutioneering’, we need to start from the context first, and only then decide on methods and measurements (if any) – not the other way round.

- “They exist within a natural setting and may vary from one setting to another.”

Largely agreed, though note that even this isn’t unproblematic. What do we mean by “a natural setting”? What are the consequences and potentially-unacknowledged constraints that arise from our chosen concept of “a natural setting”? When we say “may vary from one setting to another”, what are those variances? What account do we need to take of factors such as ‘variety-weather’ – variety of the variety itself within that given context?

- “We may observe a relationship between those observations.”

Yes, we may. Given the complexity of any real-world enterprise, we’re likely to see lots and lots of apparent relationships – many if not most of which will turn out to be salutary examples of the long-established warning that ‘correlation does not imply causation’. Hence even this isn’t unproblematic…

- “The goal is to explain those observations in a manner that is good enough to predict outcomes.”

Again, yes, that’s an assertion that we could make here. It’s actually hugely problematic, particularly around the terms ‘explain’ and ‘predict’. Both terms fit easily into the linear-paradigm, of course; but there are very good reasons why complexity-science and the soft-sciences talk more about understanding than explaining, and why the formal discipline of ‘futures’ is a plural – not the singular ‘the future’ – and explicitly rejects the linear-paradigm’s concept of ‘predicting the future’. Given that enterprise-architecture tends more to the socio-technological rather than the solely-technological, that suggests a nature – and hence theory-base – that fits more closely with complexity, soft-sciences and futures than it does with the linear-paradigm.

- “To do this, we suggest a hypothesis, which is simply a guess.”

Sort-of – though again, beware of the inherently-problematic nature of ‘explanations’ in this type of context. And whilst, yes, we could describe hypothesis as “simply a guess”, there’s actually a lot more to it than that: see, for example, the chapter on ‘Hypothesis’ in Beveridge’s The Art of Scientific Investigation, or the comments by Chris Chambers in my post ‘Hypotheses, method and recursion’.

- “We then see if we can prove or disprove the hypothesis using data.”

In principle, yes; in practice, once again, it’s a lot more problematic than it might seem at first glance. As we’ve seen above, observations, algorithms, measurements, apparent-relationships and hypotheses all carry significant subjective elements which, if we don’t explicitly set out to surface them right from the start, can lead us straight into even such elementary traps as circular-reasoning. For example, we need to be really careful about the implications here of Gooch’s Paradox, that “things not only have to be seen to be believed, but also have to be believed to be seen”.

- “If we cannot disprove the hypothesis, we have passed our first test.”

Yes, sort-of – though again we need to beware of routine rationalisation-risks such as circular-reasoning, and also the many, many forms of cognitive-bias.

- “We use the hypothesis to predict new observations.”

Again, note that the concept of ‘prediction’ in that linear-paradigm sense is highly-problematic here. (We’ll see more on that in a moment.)

- “We then check to see if the predictions are correct.”

Ditto re ‘prediction’, and ditto re ‘correct’: we can get away with this kind of simplification in a tightly-constrained, tightly-bounded context with only a handful of linear factors in play, but not in a context that has the kind of inherent complexities and ‘chaotic’ (uniqueness etc) elements that we see routinely at play in enterprise-architectures. Given that many of the contexts that we work with can change even by the act of observing them – such as in the ‘Hawthorne-effect’ – we need to be very much aware of how problematic any notions of ‘prediction’ can really be in real-world practice.

- “If so, we have a useful theory.”

Yes, we do – if we’ve properly acknowledged and worked with the subjective-elements and complexity-elements, that is. If not, then probably not… – in fact there’s a real risk of ending up with something that’s dangerously misleading, the kind of fallacious ‘self-confirming prophecy’ typified by targets and similarly ‘logical’ ideas that just don’t work in any real-world business context.

Note, though, that there’s a really interesting shift implicit in Nick’s last phrase above. All the way down so far, it’s been about purported ‘proof’, “check to see if the predictions are correct” and suchlike. In other words, the linear-paradigm’s concept of ‘true/not-true’. Yet here we shift sideways from ‘true’ to ‘useful’ – in effect, from ‘science’ to technology, and the fundamental shift in modes and disciplines that goes along with that change. That one shift changes the entire tenor of all that’s gone before in Nick’s description of his ‘theory of EA’ – and, for the practice of enterprise-architecture, it’s a shift in theory with which I would strongly agree.

Notice, by the way, how I did that analysis above. In effect, I’ve taken almost exactly the same concept of theory that Nick has described – observation, apparent-relationship, hypothesis, evidence, test – and then applied it to itself, as theory-on-theory: in other words, metatheory. We use metatheory to identify aspects of theory that are potentially-problematic – exactly as above.
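One of the cautions in that walk-through, that a complex context will throw up lots of apparent relationships that aren’t causal at all, can be made concrete with a small sketch. This is only an illustration under stated assumptions: the function names, the number of series, the sample-size and the 0.6 threshold are all hypothetical choices of mine, not anything from Nick’s post. It generates series that are unrelated by construction, and then counts how many pairs nonetheless look ‘strongly related’.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def count_apparent_relationships(n_series=30, n_points=10, threshold=0.6, seed=1):
    """Count pairs of independent random series whose correlation looks 'strong'.

    All parameters are illustrative assumptions: few observations per series
    and many series, roughly what a quick survey of an enterprise might give.
    """
    rng = random.Random(seed)
    # Every series is drawn independently, so no pair is causally linked.
    series = [[rng.gauss(0, 1) for _ in range(n_points)] for _ in range(n_series)]
    strong = 0
    total = 0
    for i in range(n_series):
        for j in range(i + 1, n_series):
            total += 1
            if abs(pearson(series[i], series[j])) >= threshold:
                strong += 1
    return strong, total


strong, total = count_apparent_relationships()
print(f"{strong} of {total} unrelated pairs look strongly correlated")
```

With only a handful of observations per series, some of those unrelated pairs will clear the threshold purely by chance, which is exactly why an observed relationship needs to be treated as problematic until shown otherwise.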

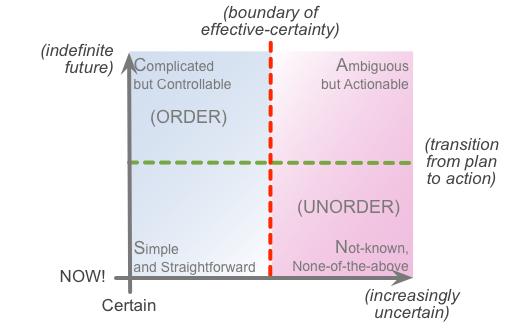

But we can also use metatheory the other way round, as a way to select appropriate theory for each given context. Let’s use the SCAN frame to illustrate this:

SCAN is an example of what I call a ‘context-space map’ – a frame that we can use to guide our thinking about a given context. Its underlying theory – such as it is – is that we can usefully (there’s that word again) map out our understanding of a context, and what happens in that context, in terms of two distinct dimensions: distance in time from the ‘NOW!’, the moment of action; and certainty versus uncertainty.

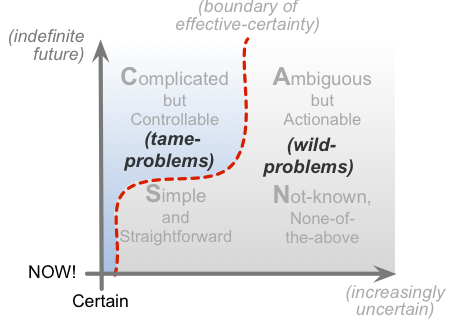

If we now map onto this frame the categories of ‘problems that are amenable to linear-paradigm models and theory’ (‘tame-problems’), and ‘problems that are not amenable to linear-paradigm models and theory’ – for example, ‘wicked-problems’ (which these days I prefer to call ‘wild-problems’) and inherent-uniqueness – we end up with a visual summary that looks like this:

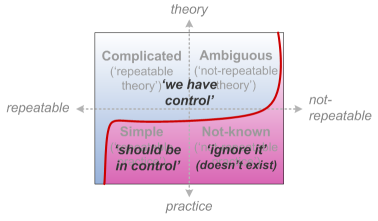

If the respective part of the context is repeatable, a truth-based or proof-based theory will work: that’s what we see over towards the left of the frame. (Notice, by the way, that truth-based models tend to work rather better in theory, somewhat away from the ‘NOW!’, than they do in practice, right up against the real-world ‘NOW!’…) But if the respective part of the context is not repeatable, in part or whole, then a truth-based model either will not work, or, worse, might not work – ‘worse’ in the sense that it will work well sometimes but not others, without itself being able to explain what’s happened.

Worse again, if circular-reasoning is in play – such as it too often is with the linear-paradigm – then we won’t even be able to see that the theory is wrong: instead, we’re likely to say that it’s the world that is ‘wrong’, rather than the theory. It’s a paradigm-error that is, unfortunately, very common amongst the current cohort of MBA-trained managers – leading to delusions that we might summarise visually as follows:

There’s yet another twist that we need to note here, though if we use it well, it actually provides a way round many of the theory-problems above. It’s that all of this is recursive and fractal – a point that we might illustrate via the classic yin-yang symbol:

Imagine that, in the yin-yang symbol above, ‘white’ represents sameness, ‘black’ represents difference.

Linear-paradigm theory depends on sameness: it doesn’t work well with difference.

Conversely, complexity-theory and suchlike will work best with difference: they tend to be somewhat misleading when they’re faced with too much sameness.

So to identify the right type of theory to use in any given context, we need to know the extent to which there’s sameness at play, versus the extent of difference. Hence for this purpose, we use metatheory to select appropriate theory, according to the mix of sameness and difference in that context. We also use metatheory to help us know when to switch theories – in effect using theory itself as a type of tool.

What the yin-yang symbol tells us, though, is that not only do sameness (‘white’) and difference (‘black’) interweave with each other, but that each also ‘contains’ elements of the other, fractally, recursively.

Let’s illustrate this with the classic phrase “You can’t compare apples and oranges” – and show that, yes, we can, and no, we can’t, both at the same time. Same, and different; different, and same; all interweaving with each other; fractal, and recursive.

So yes, apples and oranges are different:

- climate: apples require cool to cold climate, oranges require warm to hot climate

- acids: apples contain malic acid, oranges contain citric acid

- fermenting: apples can be fermented to cider, oranges not fermentable as such (to my knowledge?)

- skin texture: apples have either smooth or fine-textured skin, oranges have coarser-textured skin

- skin thickness: apples have thin difficult-to-peel skin, oranges have thicker and often easy-to-peel skin

- internal structure: apples do not have a segmented structure, oranges do

- firmness on ripening: apples remain firm when ripe, oranges become somewhat yielding

- colour on ripening: ripe apples are coloured somewhere between green and red, ripe oranges are coloured orange

If we were to assume that ‘apples and oranges are the same’ within the parts of the context where those kinds of factors predominate, it’s not likely to work well. (Although that’s pretty much how many managers try to operate, with misuse of ‘best-practice’ and suchlike…)

Yet we can also note that there are many ways in which apples and oranges are the same:

- both are types of fruit

- both are grown on mid-sized trees (as opposed to bushes or tall trees or ground-spreading vines)

- both are insect-pollinated (in contrast to wind-pollinated grapes)

- both are single-fruit (in contrast to cluster-fruit such as grapes or bananas)

- both are similar-sized (relative to grapes or plums or melons)

- both have multiple seeds around a central core (in contrast to an avocado)

- both have a relatively small number of seeds (in contrast to a pomegranate)

- both are ideally harvested from the tree (rather than windfalls)

- both contain similar sugars

- both can be eaten directly (without requiring special pre-treatment)

- both can be used for juicing

- both are green when unripe

For the parts of the context where these factors predominate, we probably can use a linear-paradigm model. (Many of the operations of farmers and fruit-processor businesses build upward from exactly that point.)

And then we have ‘different within same’ – apples are different from apples:

- colour: green to russet to mixed red-green to full-red

- acid-level: ‘eaters’ (low-acid) to ‘cookers’ (mid-acid) to ‘cider’ (high-acid)

- typical-size: cider (small) to eater (mid-size) to cooker (large)

- flavour: highly-distinctive ‘fruity’ flavours (many ‘cottage’ varieties, and some ‘commercials’ such as Pink Lady) to almost tasteless (the amazingly-misnamed ‘Golden Delicious’…)

- resilience to bruising: highly-resilient (Golden Delicious again) to easily-bruised (some ‘non-commercial’ varieties)

- consistency within variety: highly consistent in size, resilience and [lack of] taste (‘commercials’ such as Golden Delicious) versus higher-variance (most ‘traditional’ varieties)

And oranges are different from oranges:

- typical-size: small (satsuma, tangerine etc) to large (commercial-juicing etc)

- skin removal: easy-peel (satsuma etc) to non-peel (standard, juicing etc)

- skin-thickness: thin (juicing) to thick (satsuma)

- segmenting: easy-separation (satsuma etc) to non-separable (commercial-juicing etc)

So which approach to this would be “the theory of apples and oranges”? It should be clear from the above that it’s a question that doesn’t really make sense – yet it’s almost the exact equivalent of asking “What’s the theory of enterprise-architecture?”…
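To make that point a bit more tangible, here’s a minimal sketch of the same comparison. The attribute values are taken from the lists above, but the dictionary keys, the function names and the two ‘views’ are hypothetical labels of my own, and the selection of factors is deliberately tiny. What it shows is that the answer to “are apples and oranges the same?” depends entirely on which factors we choose to look at.

```python
# A few factors from the lists above; key names are illustrative only.
APPLE = {
    "kind": "fruit",
    "grows_on": "mid-sized tree",
    "pollination": "insect",
    "can_be_juiced": True,
    "climate": "cool to cold",
    "main_acid": "malic",
    "segmented": False,
}

ORANGE = {
    "kind": "fruit",
    "grows_on": "mid-sized tree",
    "pollination": "insect",
    "can_be_juiced": True,
    "climate": "warm to hot",
    "main_acid": "citric",
    "segmented": True,
}


def compare(a, b, factors):
    """Split the chosen factors into those where a and b match and those where they differ."""
    same = [f for f in factors if a[f] == b[f]]
    different = [f for f in factors if a[f] != b[f]]
    return same, different


def sameness(a, b, factors):
    """Fraction of the chosen factors on which a and b match (0.0 to 1.0)."""
    same, _ = compare(a, b, factors)
    return len(same) / len(factors)


# Two hypothetical views of the same two fruit.
view_where_sameness_dominates = ["kind", "grows_on", "pollination", "can_be_juiced"]
view_where_difference_dominates = ["climate", "main_acid", "segmented"]

for name, factors in [
    ("sameness view", view_where_sameness_dominates),
    ("difference view", view_where_difference_dominates),
    ("all listed factors", list(APPLE)),
]:
    print(name, round(sameness(APPLE, ORANGE, factors), 2))
```

Within the first view a sameness-based (linear-paradigm) model would probably be fine; within the second it would mislead; and across all the listed factors we get a mix of both. None of those results is ‘the’ answer, because each depends on a choice of factors that we made ourselves.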

Now let’s throw in another key concern: boundaries. Everything ultimately interweaves with everything else – a point we could demonstrate quite quickly in current physics – which means that, in theory, every element is affected by an infinity of possible factors. In practice, we fudge that fact, and place arbitrary boundaries around contexts in order to be able to make sense of what seems to be going on. Which is fine, as long as we never forget that those boundaries aren’t ‘real’, so much as confines that we choose.

Consider apples and oranges: where’s the boundary? We could pick any one of those factors listed above. Or we could say that it’s about comparing single fruit, single trees, a single variety, a single orchard, a cluster of orchards, different microclimates, different fruit-crops within the same district, production for local use versus for global shipping, farmers versus fruit-processors, the food-industry as a whole, the financial aspects of globalised fruit production and distribution, the global food industry in relation to other industries – about anything we choose, really. We choose boundaries according to what we want to assess, what we want to do with that assessment – but the point is that we choose those boundaries. In assessing apples and oranges, but perhaps even more in enterprise-architecture, all boundaries are an arbitrary, subjective choice.

All of which leads us to the infamous ‘enterprise-architects’ mantra’ – in particular, ‘It depends…’ and ‘Just Enough Detail’. At first glance it might not seem like it, but that ‘mantra’ actually is the real core of ‘the theory of enterprise-architecture’: metatheory as theory. ‘It depends’ is metatheory: before we can devise any appropriate theory for a context, we first need to know and understand the factors upon which it depends. And ‘Just Enough Detail’ is metatheory: we need to devise appropriate boundaries and limits that would give us just enough detail for what we need to do. But before we can do either of those, we need the last leg of that ‘mantra’: the intellectual-honesty to start off with the one phrase that so many business-folk fear the most: “I don’t know…”

Which, by a roundabout way, brings us to Nick’s ‘The EA Hypothesis’:

The EA Hypothesis: The structure of and both intentional and unintentional relationships among enterprise systems has a direct and measurable influence on the rate of potential change and organizational cost of operating and maintaining those systems.

As Nick says, “That simple statement is quite powerful”: it is indeed. And as he also says, “it demands that we create a definition for ‘enterprise system’”, methods and more. We need to look at relationships, we need to measure ‘rate of potential change’ – yet first identify what we mean by ‘rate’, ‘potential’ and ‘change’… – and also related concerns such as ‘organizational cost’.

(By the way, I strongly agree with Nick’s aside that “Cost and quality come together to include a balance of monetary cost, effectiveness, customer satisfaction, efficiency, speed, security, reliability, and many other system quality attributes”. We definitely do need to be aware that ‘costs’ – and ‘returns’ or ‘revenues’ too – need to be modelled in much more than just monetary form alone.)

Yet jump back a step, and notice a crucial factor that is not mentioned in Nick’s list of elements of the ‘enterprise system’ that we need to assess for ‘rate of potential change’:

Clearly an enterprise system has to include socio cultural systems, information technology systems, workflow systems, and governance systems.

That’s right: management. No mention at all, other than perhaps distantly sort-of-implied in ‘governance systems’. And yet as Deming and so many others have shown, it’s probably the key factor that affects ‘rate of potential change’ and ‘organizational cost of operating and maintaining those systems’. Silo-based management-structures, for example, can make it almost impossible to connect the dots across an enterprise, whilst dysfunctional management-behaviour such as bullying, micromanagement or insuperordination can cripple innovation, change or even run-time operations. Yet nary a mention in ‘The theory of enterprise-architecture’? Oops…

Yes, okay, all of us in EA are all too well aware of why such factors so often end up being quietly shunted into the ‘do not discuss, do not even mention, it doesn’t exist, honest…’ basket. But even if we dare not mention them in the presence of our self-styled ‘masters’, we need to include those factors within our assessments – even if only ‘via stealth’ – otherwise our work risks becoming not only known-incomplete and potentially-invalid, but also potentially darn-dangerous as well.

It’s also why we need to be very careful about the use – or misuse, rather – of the definite-article. It’s not ‘The Theory of Enterprise-Architecture’, but a theory of enterprise-architecture, one amongst many that we might use; it’s not ‘The EA Hypothesis’, but merely an EA hypothesis, one amongst many that we might use – and whilst those distinctions might seem petty at first, they’re utterly crucial, because without that discipline around even something as simple as the proper use of definite versus indefinite, we could easily define ourselves into an irrecoverable mess. You Have Been Warned, etc…?

Nick ends his post with a ‘Next Steps’ section, all of which is solid good sense for that type of context: it’s what we need to do now, to build enterprise-architecture towards a true professional discipline. I strongly agree that enterprise-architecture needs a far better understanding of theory than it has at present.

Yet what’s not in his post is this crucial distinction between theory and metatheory. As a parallel example, in quality-systems the first procedure we write is the procedure to write procedures; in parts of enterprise-architecture, the first principle we establish is the principle about the primacy of principles. In the same way, before we can develop appropriate theory for enterprise-architecture, we first need a solid theory-of-theory – in other words, a metatheory for EA. For our kind of context in EA, metatheory must come before theory: and we forget that fact at our peril.